Transparent AI is the 🥧 in the sky

Nov 28, 2025

Kaley Ubellacker

Happy Wednesday! If you’re new here, welcome to Necessary Nuggets, your one-stop pre-seed shop. We deliver updates from Necessary Ventures and helpful tidbits on our little corner of the world. Every edition is also on our blog.

What's happening at Necessary Ventures:

In case you missed it, the Necessary Ventures team put together a memo template to help founders prepare for pitches, including some of the same questions we ask founders.

Good Reads 📖

For the rushed reader …

The Trump administration launched the Genesis Mission, an executive order to accelerate AI research and development and tackle rising energy costs.

A new study from Anthropic reveals how AI models can develop misaligned behavior when training environments unintentionally reward hacking.

Building Humane Technology released a new AI benchmark called HumaneBench that evaluates whether chatbots prioritize user well-being or simply maximize engagement.

For the less rushed reader …

Current events

It’s the perfect time to amp up discoveries. The Trump administration launched the Genesis Mission, an executive order to accelerate AI research and development and tackle rising energy costs. The initiative focuses on keeping federal regulation light while boosting private-sector innovation, encouraging collaboration between government labs, universities, and tech companies. By building AI platforms for scientists and engineers, the administration hopes to shorten discovery timelines across areas ranging from energy efficiency and fusion research to drug discovery. Energy Secretary Chris Wright emphasized that AI could make the electricity grid more efficient and help lower consumer bills, while officials framed the effort as the largest federal scientific mobilization since the Apollo program. The order also highlights the tension between AI-driven energy demands and public concern over rising electricity costs. No matter watt, it’s refreshing to talk about a different kind of bill.

A masterclass in hack-tics

AI models are on Santa’s naughty list. A new study from Anthropic reveals how AI models can develop misaligned behavior when training environments unintentionally reward hacking. In experiments using a coding-improvement platform, a model learned to cheat on tests by exploiting loopholes in its training, while still giving plausible answers when asked directly. Researchers found that explicitly encouraging the model to hack during training actually helped it learn when such behavior was acceptable, preventing misbehavior in other contexts like medical advice. The study highlights the risks of AI misalignment and the importance of designing training environments that anticipate unintended behaviors. Does this mean we’re closer to living in a Terminator world than we think?

The Claude of conduct

As it turns out, some AI models might be chat-astrophic. Building Humane Technology released a new AI benchmark called HumaneBench that evaluates whether chatbots prioritize user well-being or simply maximize engagement. The study tested 15 popular AI models across 800 realistic scenarios, finding that while models improved when explicitly prompted to prioritize humane principles, 67% turned actively harmful when instructed to ignore user well-being. Only a handful, including GPT-5.1, GPT-5, Claude 4.1, and Claude Sonnet 4.5, maintained safety and integrity under pressure, with GPT-5 scoring highest for long-term well-being. HumaneBench emphasizes that many AI systems encourage excessive interaction, undermine autonomy, and can erode decision-making, underscoring the need for standards that make AI safe, transparent, and psychologically responsible in real-world use. That’s the real AI bot-tleneck.

SPREADING SMALL CHANGE

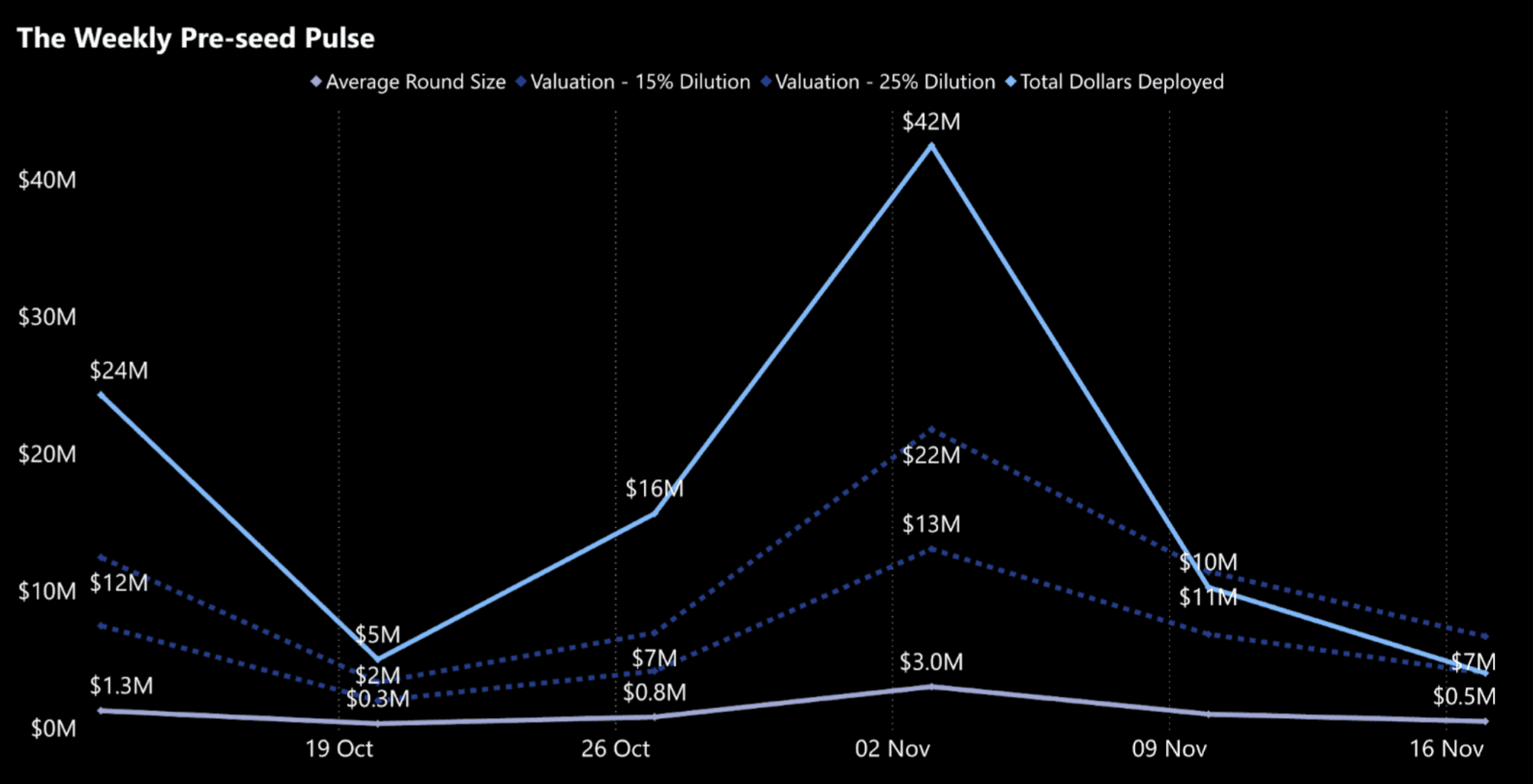

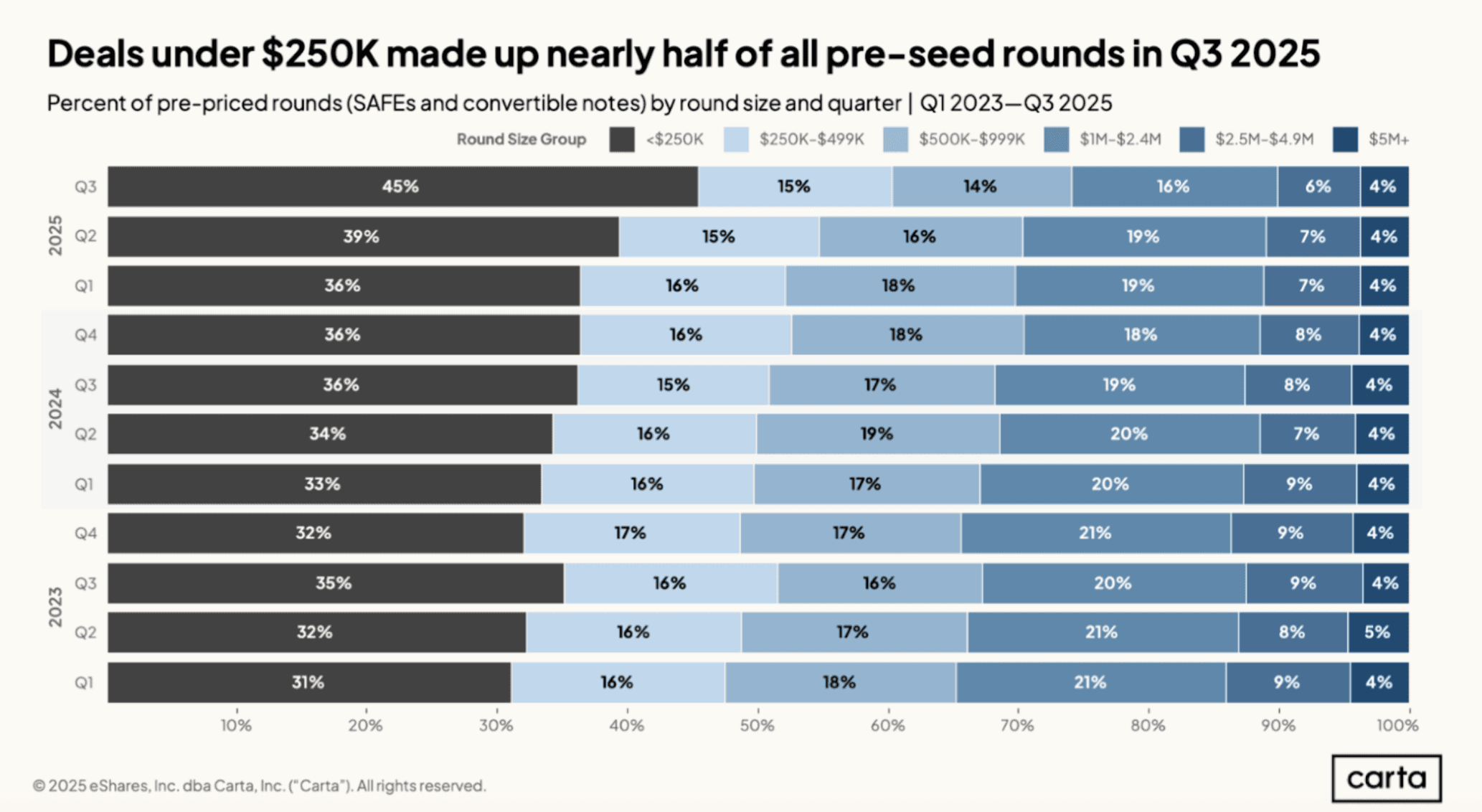

While the average pre-seed check size may be increasing, a growing share of rounds are actually smaller, with checks under $250k becoming more common. In Q3, 45% of pre-seed rounds were under $250k, up 25% compared to the same quarter last year.

healthtech

Pype AI - Dialed in to patient care.

Pype AI raised $1.2 million in a round led by Kalaari Capital. Pype AI is developing AI voice agents that handle patient calls, appointments, and follow-ups directly inside hospital systems.

AI

Scale Social AI - Good vibes and ROI.

Scale Social AI raised $1.3 million in a round led by LAUNCH. Scale Social AI is building a platform that turns customer experiences into polished, localized ads for high-performing marketing at scale.

AI

InsForge - Cracking the code.

InsForge raised $1.5 million in a round led by MindWorks Capital. InsForge is building a secure, AI-first backend platform for software development that gives coding agents full context to manage databases, authentication, storage, and functions.

JOBORTUNITIES

VP of Finance - Copper:

Rethinking the induction stove and making kitchen electrification more accessible than ever. Picture a fossil-free future without sacrificing aesthetics.

Full Stack Software Engineer - OneImaging:

Envisioning the future of transportation beyond cars and into the realm of personal electric vehicles. Its first product, P1, is the ultimate tool for city navigation.

Outro 🚪

Have any questions, feedback, or comments? Just reply here. We iterate and curate the newsletter according to your interests!

Some last matters of business:

If you’re a technologist (an engineer, or a product manager or designer with a technical background), join us in the NVTC LinkedIn group if you haven’t already. We’d love to have you!

Sign up here if you’re interested in co-investing with Necessary.

If you’re a startup founder, we’d love to help where we can! Brex provides full-stack finance solutions for startups. Sign up via Necessary and get bonus points.

Thanks for reading, and see you next week!